Erlang GraphQL Tutorial

The guide here is a running example of an API implemented in Erlang through the ShopGun GraphQL engine. The API is a frontend to a database containing information about the Star Wars films by George Lucas. The intent is to give readers enough information that they can go build their own GraphQL servers in Erlang.

We use the GraphQL system at https://shopgun.com as a data backend. We sponsor this tutorial as part of our Open Source efforts. We developed this GraphQL system to meet our demands as our system evolves. The world of tracking businesses and offers is a highly heterogeneous dataset, which requires the flexibility of something like GraphQL.

Because GraphQL provides a lot of great tooling, we decided to move forward and implement a server backend for Erlang, which didn’t exist at the time.

At the same time, we recognize other people may be interested in the system and its development. Hence the decision was made to open source the GraphQL parts of the system.

Introduction

The Erlang GraphQL system allows you to implement GraphQL servers in Erlang. It works as a library which you can use on top of existing web servers such as Cowboy, Webmachine, Yaws and so on.

As a developer, you provide a schema which defines the query structure your server offers. Next, you map your schema onto Erlang modules, which then define a binding between the two worlds.

Clients execute queries against the server according to the structure of the schema. The GraphQL system then figures out a query plan and executes it. Execution in turn calls your bound modules, which allows you to process the query, load data, and so on.

For a complete list of changes over time to this document, take a look at the Changelog appendix.

On this tutorial

Note: We are currently building the document and are still making changes to it. Things can still move around and change. If you see a “TBD” marker it means that section is “To Be Done” and will be written at a later point. In the same vein, the code base is being built up as well, so not everything may be fully described yet.

Note: The current version of Erlang GraphQL returns some errors which are hard to parse and understand. It is our intention to make the error handling better and cleaner in a later version.

The tutorial you are now reading isn’t really a tutorial per se where you type in stuff and see the output. There is a bit too much code for that kind of exposition. Rather, the tutorial describes a specific project implemented by means of the GraphQL system. You can use the ideas herein to build your own.

There are examples of how things are built however, so you may be able to follow along and check out the construction of the system as a whole. Apart from being a small self-contained functional GraphQL project, it is also a small self-contained functional rebar3 project. So there’s that.

Prerequisites

Some Erlang knowledge is expected for reading this guide. General Erlang concepts will not be explained, but are assumed to be known. Some Mnesia knowledge will also help a bit in understanding what is going on, though if you know anything about databases in general, that is probably enough. Furthermore, some knowledge of the web in general is assumed. We don’t cover the intricacies of HTTP 1.1 or HTTP/2, for instance.

This tutorial uses a couple of dependencies:

- Rebar3 is used to build the software
- Cowboy 1.x is used as a web server for the project
- GraphiQL is used as a web interface to the Graph System
- Erlang/OTP version 19.3.3 was used in the creation of this tutorial

Supported Platforms

The GraphQL system should run on any system which can run Erlang. The library does not use any special tooling, nor does it make any assumptions about the environment. If Erlang runs on your platform, chances are that GraphQL will too.

Comments & Contact

The official repository location is

If you have comments on the document or corrections, please open an Issue in the above repository on the thing that is missing. Also, feel free to provide pull requests against the code itself.

Things we are particularly interested in:

- Parts you don’t understand. This often means something isn’t described well enough and needs improvement.
- Code sequences that don’t work for you. There is often some prerequisite the document should mention but doesn’t.
- Bad wording. Things should be clear and precise. If a particular sentence doesn’t convey information clearly, we’d rather rewrite it than confuse the next reader.
- Bugs in the code base.
- Bad code structure. A problem with a tutorial repository is that it can “infect” code in the future. People copy from this repository, so if it contains bad style, then that bad style is copied into other repositories, infecting them with the same mistakes.
- Stale documentation. Parts of the documentation which were relevant in the past but aren’t anymore. For instance, ID entries which don’t work anymore.

License

Copyright © 2017 ShopGun.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Acknowledgments

- Everyone involved in the Star Wars API. We use that data extensively.
- The GraphQL people who did an excellent job at answering questions and provided us with a well-written specification.
- Josh Price. The parser was derived from his initial work, though it has been changed a lot since the initial commit.

Why GraphQL

A worthy question to ask is “Why GraphQL?”

GraphQL is a natural extension of what we are already doing on the Web. As our systems grow, we realize that they gradually become more heterogeneous: data becomes more complex and more varied.

In addition—since we usually have a single API serving multiple different clients, written in different languages for different platforms—we need to be flexible in query support. Clients are likely to evolve dynamically, non-linearly, and at different paces. Thus, the backend must support evolution while retaining backwards compatibility. Also, we must have a contract or protocol between the clients and the server that is standardized. Otherwise, we end up inventing our own system again and again, and this is a strenuous affair which has little to no reuse between systems.

The defining characteristic of GraphQL is that the system is client-focused and client-centric. Data is driven by the client of the system, and not by the server. The consequence is that the delivery time for features tends to be shorter. As soon as the product knows what change to make, it can often be handled with less server-side interaction than usual. This holds especially for the case where you are recombining existing data into a new view.

RESTful APIs have served us well for a long time, and they are likely to continue serving us well in a large number of situations. However, if you have a system requiring more complex interaction, chances are you are better off taking the plunge and switching your system to GraphQL.

RESTful APIs recently got a powerful improvement in HTTP/2, which allows RESTful APIs to pipeline far better than they did earlier. However, you still pay the round-trip time between data dependencies in an HTTP/2 setting: you need the listing of keys before you can start requesting the data objects on those keys. In contrast, GraphQL queries tend to be a single round-trip only. A full declarative query is formulated and executed, without the need for any intermediate query. This means faster response times, even in the case where a single query becomes slower, since there is no need for follow-up queries.

A major (subtle) insight is that in a GraphQL server, you don’t have to hand-code the looping constructs which tend to be present in a lot of RESTful APIs. To avoid the round-trips described in the preceding paragraph, you often resort to a solution where a specialized optimized query is constructed and added to the system. This specialized endpoint then loops over the data in one go so you avoid having to do multiple round-trips.

In a GraphQL system, that looping is handled once-and-for-all by the GraphQL engine. You are only implementing callbacks that run as part of the loop. A lot of tedious code is then handled by GraphQL and we avoid having to code this again and again for each RESTful web service we write.
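To make the idea of "the engine loops, you supply callbacks" concrete, here is a deliberately tiny toy sketch. It is not how Erlang GraphQL is implemented and it uses none of its API; the module name `toy_engine`, the query representation, and the `Resolve` callback shape are all invented for this illustration.

```erlang
-module(toy_engine).
-export([execute/3, demo/0]).

%% A toy query is a list of fields. A field is either a bare atom
%% (a leaf value) or {Field, SubQuery} for a field leading to objects.
%% Resolve is the only part a "service author" writes in this toy.
execute(Query, Obj, Resolve) ->
    maps:from_list([{field_name(F), run_field(F, Obj, Resolve)} || F <- Query]).

field_name({Name, _SubQuery}) -> Name;
field_name(Name) -> Name.

%% The traversal and the looping over lists live here, once and for all.
run_field({Name, SubQuery}, Obj, Resolve) ->
    case Resolve(Name, Obj) of
        Children when is_list(Children) ->
            [execute(SubQuery, Child, Resolve) || Child <- Children];
        Child ->
            execute(SubQuery, Child, Resolve)
    end;
run_field(Name, Obj, Resolve) ->
    Resolve(Name, Obj).

%% Hypothetical in-memory data; a real resolver would load from a database.
demo() ->
    Planet = #{name => <<"Tatooine">>,
               residents => [#{name => <<"Luke Skywalker">>},
                             #{name => <<"R5-D4">>}]},
    Resolve = fun(Field, Obj) -> maps:get(Field, Obj) end,
    execute([name, {residents, [name]}], Planet, Resolve).
```

Calling `toy_engine:demo()` walks the nested query and returns a map with the same shape as the query. The resolver never loops by itself; it only answers "what is the value of this field on this object?".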

You can often move your system onto GraphQL a bit at a time. You don’t have to port every endpoint in the beginning. Often, people add some kind of field, previousId say, which is used as an identifier in the old system. Then you can gradually take over data from an old system and port it on top of GraphQL. Once the ball is rolling, it is likely that more and more clients want to use it, as it is an easier interface for them to use.

System Tour

Since a system as large as GraphQL can seem incomprehensible when you first use it, we will begin by providing a system tour explaining by example how the system works. In order to start the system for the first time, we must construct a release.

To make a release, run the following command:

    $ make release

This builds a release inside the _build directory and makes it available. In order to run the release, we can ask to run it with a console front-end, so we get a shell on the Erlang system:

    $ _build/default/rel/sw/bin/sw console

The system should boot and start running. A typical invocation looks like:

    Erlang/OTP 19 [erts-8.3] [source] [64-bit] [smp:8:8] [async-threads:30] [hipe] [kernel-poll:true] [dtrace]

    15:33:05.705 [info] Application lager started on node 'sw@127.0.0.1'
    15:33:05.705 [info] Application ranch started on node 'sw@127.0.0.1'
    15:33:05.706 [info] Application graphql started on node 'sw@127.0.0.1'
    15:33:05.706 [info] Application sw_core started on node 'sw@127.0.0.1'
    15:33:05.706 [info] Application cowboy started on node 'sw@127.0.0.1'
    15:33:05.706 [info] Starting HTTP listener on port 17290
    Eshell V8.3 (abort with ^G)
    (sw@127.0.0.1)1>

Note: To exit an Erlang node like this, you can either press Ctrl-C twice, which stops the system abruptly, or you can be nice to the system and ask it to close gracefully, one application at a time, by entering q().<RET> in the shell.

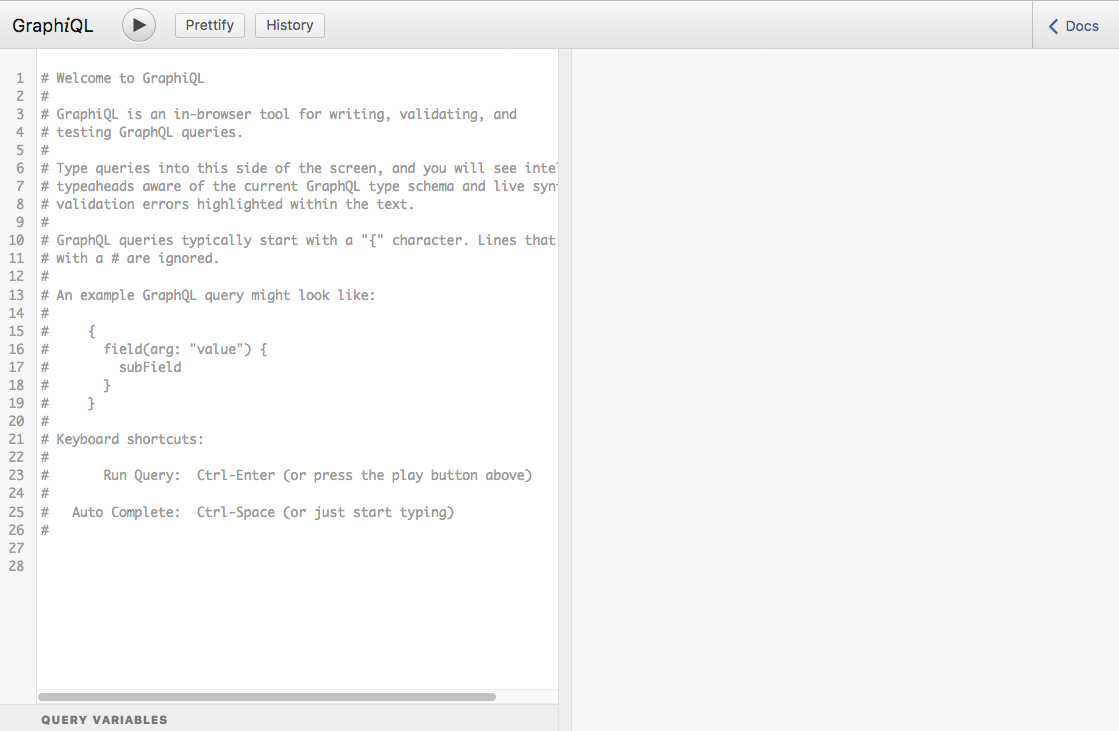

Once the Erlang emulator is running our sw release, we can point a

browser to http://localhost:17290/ and you should be greeted

with the following screen:

First query

The first query we will run requests a given Planet from the system. This query follows a set of rules, the Relay Modern GraphQL conventions. These conventions were formed by Facebook as part of their Relay Modern system. They define a common set of functionality on top of the GraphQL system which clients can rely on.

In particular, our first query uses the rules of Object Identification, which is a way to load an object whose identity you already know. A more complete exposition of the conventions is in the section Relay Modern, but here we skip the introduction for the sake of brevity:

    query PlanetQuery {
      node(id:"UGxhbmV0OjE=") { (1)
        ... on Planet { (2)
          id (3)
          name
          climate
        }
      }
    }

(1) The ID entered here is opaque to the client, and we assume it was obtained in an earlier query. We will show typical ways to list things later in this section.

(2) In case you are only slightly familiar with GraphQL: this notation is called an inline fragment. The output of the node field is of type Node and here we restrict ourselves to the type Planet.

(3) This requests the given fields in the particular planet we loaded.

If you enter this in the GraphiQL left window and press the “Run” button, you should get the following response:

    {
      "data": {
        "node": {
          "climate": "arid",
          "id": "UGxhbmV0OjE=",
          "name": "Tatooine"
        }
      }
    }

Note how the response reflects the structure of the query. This is a powerful feature of GraphQL since it allows you to build up queries client side and get deterministic results based on your query structure.
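Clients should treat the id value as opaque. As an aside for the curious, the ID used in this example happens to be a Base64 encoding of a type name and a numeric key. The sketch below decodes such an ID; the module and function names (`sw_id_peek`, `decode/1`) are made up for this illustration and are not part of the tutorial code base, and the `Type:Key` layout is an observation about this example value, not a documented guarantee.

```erlang
-module(sw_id_peek).
-export([decode/1]).

%% Decode an ID as seen in the example response into {Type, Key}.
%% Assumption: the decoded form is <<"Type:Key">> with an integer key,
%% which holds for the ID shown above but is not a documented contract.
-spec decode(binary()) -> {binary(), integer()}.
decode(Id) when is_binary(Id) ->
    Decoded = base64:decode(Id),
    [Type, Key] = binary:split(Decoded, <<":">>),
    {Type, binary_to_integer(Key)}.
```

For example, `sw_id_peek:decode(<<"UGxhbmV0OjE=">>)` returns `{<<"Planet">>, 1}`. This kind of peeking is useful when debugging a server, but client code should keep passing the ID around unchanged.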

More advanced queries

Let us look at a far more intricate query. In this query, we will also request a planet, but then we will ask “What films does this planet appear in?” and “Who are the residents on the planet?”, that is, who has the planet as their homeworld?

To do this, we use pagination. We ask for the first 2 films and the first 3 residents. We also ask for the relevant meta-data of the connections while we are here:

    query Q {
      node(id:"UGxhbmV0OjE=") {
        ... on Planet {
          id
          name
          climate
          filmConnection(first: 2) {
            totalCount
            pageInfo {
              hasNextPage
              hasPreviousPage
            }
            edges {
              node {
                ...Films
              }
              cursor
            }
          }
          residentConnection(first: 3) {
            totalCount
            pageInfo {
              hasNextPage
              hasPreviousPage
            }
            edges {
              node {
                ...Residents
              }
              cursor
            }
          }
        }
      }
    }

    fragment Films on Film {
      id
      title
      director
    }

    fragment Residents on Person {
      id
      name
      gender
    }

The fragment parts allow your queries to re-use different subsets of a larger query again and again. We use this here to show off that capability of GraphQL. The result follows the structure of the query:

    {
      "data": {
        "node": {
          "climate": "arid",
          "filmConnection": {
            "edges": [
              {
                "cursor": "MQ==",
                "node": {
                  "director": "George Lucas",
                  "id": "RmlsbTox",
                  "title": "A New Hope"
                }
              },
              {
                "cursor": "Mg==",
                "node": {
                  "director": "Richard Marquand",
                  "id": "RmlsbToz",
                  "title": "Return of the Jedi"
                }
              }
            ],
            "pageInfo": {
              "hasNextPage": true,
              "hasPreviousPage": false
            },
            "totalCount": 5
          },
          "id": "UGxhbmV0OjE=",
          "name": "Tatooine",
          "residentConnection": {
            "edges": [
              {
                "cursor": "MQ==",
                "node": {
                  "gender": "n/a",
                  "id": "UGVyc29uOjg=",
                  "name": "R5-D4"
                }
              },
              {
                "cursor": "Mg==",
                "node": {
                  "gender": "male",
                  "id": "UGVyc29uOjEx",
                  "name": "Anakin Skywalker"
                }
              },
              {
                "cursor": "Mw==",
                "node": {
                  "gender": "male",
                  "id": "UGVyc29uOjE=",
                  "name": "Luke Skywalker"
                }
              }
            ],
            "pageInfo": {
              "hasNextPage": true,
              "hasPreviousPage": false
            },
            "totalCount": 10
          }
        }
      }
    }

Simple Mutations

Now, let us focus on altering the database through a mutation. In GraphQL, this is the way a client runs “stored procedures” on the Server side. The Star Wars example has tooling for factions in the Star Wars universe, but there are currently no factions defined. Let us amend that by introducing the rebels:

    mutation IntroduceFaction($input: IntroduceFactionInput!) {
      introduceFaction(input: $input) {
        clientMutationId
        faction {
          id
          name
          ships {
            totalCount
          }
        }
      }
    }

This query uses the GraphQL feature of input variables. In the UI, you can click and expand the section Query Variables under the query pane. This allows us to build a generic query like the one above and then repurpose it for creating any faction by providing the input variables for the query:

    {
      "input": {
        "clientMutationId": "D9A5939A-DF75-4C78-9B32-04C1C64F9D9C", (1)
        "name": "Rebels"
      }
    }

(1) This is chosen arbitrarily by the client and can be any string. Here we use a UUID.

The server, when you execute this query, will respond with the creation of a new Faction and return its id, name and starships:

    {
      "data": {
        "introduceFaction": {
          "clientMutationId": "D9A5939A-DF75-4C78-9B32-04C1C64F9D9C", (1)
          "faction": {
            "id": "RmFjdGlvbjoxMDAx", (2)
            "name": "Rebels",
            "ships": {
              "totalCount": 0 (3)
            }
          }
        }
      }
    }

(1) The server reflects back the unique client-generated Id for correlation purposes.

(2) The Id might be different depending on how many Faction objects you created.

(3) We have yet to assign any starships to the faction, so the count is currently 0.

We can now query this faction by its Id because it was added to the system:

    query FactionQuery {
      node(id: "RmFjdGlvbjoxMDAx") {
        ... on Faction {
          id
          name
        }
      }
    }

The system also persisted the newly created faction in its database, so restarting the system keeps the added faction.

Note: Use q() in the shell to close the system gracefully. Otherwise you may be in a situation where a change isn’t reflected on disk. The system will still load a consistent view of the database, but it will be from before the transaction was run. The Mnesia system used is usually quick at adding data to its WAL, but there is no guarantee.

More complex mutations

With the rebels in the Graph, we can now create a new Starship, a B-Wing, which we will add to the graph. We will also attach it to the newly formed faction of Rebels. The mutation here exemplifies operations in which you bind data together in GraphQL. Our mutation looks like:

    mutation IntroduceBWing {
      introduceStarship(input:
        { costInCredits: 5.0, (1)
          length: 20.0,
          crew: "1",
          name: "B-Wing",
          faction: "RmFjdGlvbjoxMDAx", (2)
          starshipClass: "fighter"}) {
        starship {
          id
          name
        }
        faction {
          id
          name
          ships {
            totalCount
            edges {
              node {
                id name
              }
            }
          }
        }
      }
    }

(1) The values here are not for a “real” B-wing fighter, but are just made up somewhat arbitrarily.

(2) The ID of the Faction. If you run this, the ID may be a bit different, so make sure you get the right ID here.

We create a new Starship, a B-wing, in the Rebels faction. Note the resulting object, IntroduceStarshipPayload, contains the newly created Starship as well as the Faction which was input as part of the query. This is common in GraphQL: return every object of interest as part of a mutation.

The result of the query is:

    {
      "data": {
        "introduceStarship": {
          "faction": {
            "id": "RmFjdGlvbjoxMDAx",
            "name": "Rebels",
            "ships": {
              "edges": [
                {
                  "node": {
                    "id": "U3RhcnNoaXA6MTAwMQ==",
                    "name": "B-Wing"
                  }
                }
              ],
              "totalCount": 1
            }
          },
          "starship": {
            "id": "U3RhcnNoaXA6MTAwMQ==",
            "name": "B-Wing"
          }
        }
      }
    }

Note how the newly formed starship is now part of the Rebel faction’s starships, and that the total count of starships in the Faction is now 1. The created field on the Starship is automatically generated by the system as part of introducing it.

Note: Not all the fields on the newly formed starship are "valid", insofar as we decided to reduce the interface here in order to make it easier to understand in the tutorial. A more complete solution would force us to input every field on the Starship we just introduced and also use sensible defaults if not given.

This tutorial

This tutorial will tell you how to create your own system which can satisfy queries as complex and complicated as the examples we just provided. It will explain the different parts of the GraphQL system and how you achieve the above.

Getting Started

This tutorial takes you through the creation of a GraphQL server implementing the now ubiquitous Star Wars API. This API was created a couple of years ago to showcase a REST interface describing good style for creation of APIs. The system revolves around a database containing information about the Star Wars universe: species, planets, starships, people and so on.

GraphQL, when it was first released, ported the Star Wars system from REST to GraphQL in order to showcase how an API would look once translated. Because of its ubiquity, we have chosen to implement this schema in the tutorial you are now reading:

- It is a small straightforward example. Yet it is large enough that it will cover most parts of GraphQL.
- If the reader is already familiar with the system in another GraphQL implementation, it makes pickup of Erlang GraphQL faster.
- We can use a full system as a driving example for this tutorial.
- If Erlang GraphQL has a bug, it may be possible to showcase the bug through this repository. This makes it easier to work on since you have immediate common ground.

The goal of the tutorial is to provide a developer with a working example from which you can start. Once completed, you can start adding your own types to the tutorial. And once they start working, you can "take over" the system and gradually remove the Star Wars parts until you have a fully working example.

This implementation backs the system by means of a Mnesia database. The choice is deliberate for a couple of reasons:

- Mnesia is present in any Erlang system and thus it provides a simple way to get started and set up.
- Mnesia is not a Graph Database. This makes it explicit that your database can be anything. In fact, the "Graph" in GraphQL is a misnomer since GraphQL works even when your data does not have a typical Graph-form. It is simply a nice query structure.

What we do not cover

This tutorial doesn’t cover everything in the repository:

- The details of the rebar3 integration and the relx release handling.
- The tutorial only covers the parts of the code where there is something to learn. The areas of the code that get exposition in this document do so because they convey some kind of important information about the use of the GraphQL system for Erlang. Other parts, which are needed for completeness but aren’t as important, are skipped.
- There is no section on “how do I set up an initial Erlang environment” as it is expected to be done already.

Overview

The purpose of a GraphQL server is to provide a contract between a client and a server. The contract ensures that the exchange of information follows a specific structure, and that queries and responses are in accordance with the contract specification.

Additionally, the GraphQL server’s contract defines what kind of queries are possible and what responses will look like. Every query and response is typed and a type checker ensures correctness of data.

Finally, the contract is introspectable by the clients. This allows automatic deduction of queries and built-in documentation of the system interface.

Thus, a GraphQL server is also a contract checker. The GraphQL system ensures that invalid queries are rejected, which makes it easier to implement the server side: you can assume queries are valid to a far greater extent than is typical in other systems such as typical REST interfaces.

Plan

In order to get going, we need a world in which to operate. First, we must provide two schemas: one for the GraphQL system, and one for the Mnesia database.

The GraphQL schema defines the client/server contract. It consists of several GraphQL entity kinds. For example:

- Scalar types: Extensions on top of the default types. Often used for Dates, DateTimes, URIs, Colors, Currency, Locales and so on.
- Enumerations: Values taken from a limited set. An example could be the enumeration of weekdays: “MONDAY, TUESDAY, WEDNESDAY, …, SUNDAY”.
- Input Objects: Data flowing from the Client to the Server (Request).
- Output Objects: Data flowing from the Server to the Client (Response).

A somewhat peculiar choice by the GraphQL authors is that the worlds of Input and Output objects differ. In general, a Client has no way to "PUT" an input object back into the Graph as is the case in REST systems. From a type-level perspective, client requests and server responses have different polarity.

It may seem as if this is an irritating choice. You often have to specify the “same” object twice: once for input and once for output. However, as your GraphQL system grows in size, it turns out this choice is the right one. You quickly run into situations where a client supplies a desired specific change where many of the fields on the output object don’t make sense. By splitting the input and output worlds, this is easy to facilitate, since the input objects can omit the fields that don’t make sense.

In a way, your GraphQL system is built such that changes to the data are done by executing “transactions” through a set of stored procedures. This can be seen as using the "`PATCH`" method of RESTful interfaces and not having a definition of PUT.

GraphQL splits the schema into two worlds: query and mutation. From the server side, the difference is mostly non-existent: the GraphQL system is allowed to parallelize queries but not mutations. But from the perspective of the client, the starting point in the graph is either the query or the mutation object.

GraphQL implements what is essentially CQRS by making a distinction between the notion of a query and a mutation. Likewise, the server side makes this distinction. But on the server side it is merely implemented by having different starting objects in the graph execution.

Our Star Wars schema uses the database Mnesia as a backend. It is important to stress that you often have a situation where your database backend doesn’t map 1-1 onto your specified GraphQL schema. In larger systems, this is particularly important: the GraphQL schema is often served by multiple different backends, and those backends are not going to cleanly map onto the world we expose to the clients. So the GraphQL schema contract becomes a way to mediate between the different data stores. As an example, you may satisfy some parts of the GraphQL query from a dedicated search system—such as ElasticSearch—while others are served as rows from a traditional database, such as MySQL or Postgresql. You may even have a message queue broker or some other subsystem in which you have relevant data you want to query. Or perhaps, some queries are handled by micro-services in your architecture.

Over the course of having built larger systems, we’ve experienced that mappings which try to achieve an isomorphism between the backend and the schema create more problems than they solve. Small changes have consequences throughout the stack. Worse, you can’t evolve part of the system without evolving other parts, which impairs the flexibility of the system.

Another problem is that you may end up with an impedance mismatch between the Objects and links of the GraphQL query and the way you store your data in the backend. If you force a 1-1 relationship between the two, you can get into trouble because your GraphQL schema can’t naturally describe data.

A common problem people run into with Mnesia is how to “get started”. What people often resort to are solutions where an initial database is created if it doesn’t exist. These solutions are often brittle.

Here, we pick another solution. A helper can create a database schema for us, with all the necessary tables. The real release assumes the presence of an initial database and won’t boot without one. This means the Erlang release is simpler. There is always some database from which it can boot and operate. That database might be the empty database since we are just starting out. But in particular, the release won’t concern itself with creating an initial database. Rather it will assume one is already existing.

The situation is not much different from using a traditional schema-oriented database. Usually, you have to create the database first, and then populate the schema with some initial data. It is only because of Rails/Django-like systems, in which databases are established through migrations, that we have started using different models.

Mnesia

Setting up an initial Mnesia schema

To get up and running, we begin by constructing a Mnesia schema we can start from. We do this by starting a shell on the Erlang node and then asking it to create the schema:

    $ git clean -dfxq (1)
    $ make compile (2)
    $ make shell-schema (3)
    erl -pa `rebar3 path` -name sw@127.0.0.1
    Erlang/OTP 19 [erts-8.3] [source] [64-bit] [smp:8:8] [async-threads:10] [hipe] [kernel-poll:false] [dtrace]

    Eshell V8.3 (abort with ^G)
    1> sw_core_db:create_schema(). % (4)

(1) Clean out the source code repository to make sure there are no lingering files. Use caution with this command: it deletes untracked files, so it can destroy work if run in the wrong directory.

(2) Compile the code so we have compiled versions of the modules we can load.

(3) Run the Erlang interpreter with an altered path for our newly compiled modules.

(4) Create the schema.

The call create_schema() runs the following schema creation code:

    create_schema() ->
        mnesia:create_schema([node()]),
        application:ensure_all_started(mnesia),
        ok = create_fixture(disc_copies, "fixtures"),
        mnesia:backup("FALLBACK.BUP"),
        mnesia:install_fallback("FALLBACK.BUP"),
        application:stop(mnesia).

    create_fixture(Type, BaseDir) ->
        ok = create_tables(Type),
        ok = populate_tables(BaseDir),
        ok.

Creating the schema amounts to running a set of commands from the Mnesia documentation. The helper function to create tables contains a large number of tables, so we are just going to show two here:

    create_tables(Type) ->
        {atomic, ok} =
            mnesia:create_table(
              starship,
              [{Type, [node()]},
               {type, set},
               {attributes, record_info(fields, starship)}]),
        {atomic, ok} =
            mnesia:create_table(
              species,
              [{Type, [node()]},
               {type, set},
               {attributes, record_info(fields, species)}]),
        %% ... the remaining tables are created in the same way

In Mnesia, tables are Erlang records. The #planet{} record needs a definition, which lives in the header file sw_core_db.hrl. We simply list the entries which are defined in the SWAPI GraphQL schema so we can store the concept of a planet in the system:

    -record(planet,
            {id :: integer(),
             edited :: calendar:datetime(),
             climate :: binary(),
             surface_water :: integer(),
             name :: binary(),
             diameter :: integer(),
             rotation_period :: integer(),
             created :: calendar:datetime(),
             terrains :: [binary()],
             gravity :: binary(),
             orbital_period :: integer() | nan,
             population :: integer() | nan
            }).

Every other table in the system is handled in the same manner, but they are not given here for brevity. They follow the same style as the example above.
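To see how a record definition and mnesia:create_table/2 fit together, the following self-contained sketch creates a RAM-only table, writes one row and reads it back. It is an illustration only: the module name `sw_mnesia_sketch` and the reduced three-field #planet{} record are assumptions made here, and it uses ram_copies so it does not touch the disc schema described above.

```erlang
-module(sw_mnesia_sketch).
-export([demo/0]).

%% Reduced stand-in for the real #planet{} record (illustration only).
-record(planet, {id :: integer(), name :: binary(), climate :: binary()}).

demo() ->
    %% Started without a disc schema, Mnesia runs with a RAM-only schema,
    %% which is sufficient for ram_copies tables.
    ok = mnesia:start(),
    {atomic, ok} =
        mnesia:create_table(
          planet,
          [{ram_copies, [node()]},
           {type, set},
           %% The record's field names become the table's attributes.
           {attributes, record_info(fields, planet)}]),
    Write = fun() ->
                mnesia:write(#planet{id = 1,
                                     name = <<"Tatooine">>,
                                     climate = <<"arid">>})
            end,
    {atomic, ok} = mnesia:transaction(Write),
    %% The first record field (id) is the key of the table.
    Read = fun() -> mnesia:read(planet, 1) end,
    {atomic, [#planet{name = Name}]} = mnesia:transaction(Read),
    stopped = mnesia:stop(),
    Name.
```

Because the table name equals the record name, mnesia:write/1 can infer the table from the record itself, which is the same convention the schema code above relies on.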

Populating the database

Once we have introduced tables into the system, we can turn our attention to populating the database tables. For this, we use the SWAPI data set as the primary data source. This set has its fixtures stored as JSON documents. So we use jsx to decode those JSON documents and turn them into Mnesia records, which we then insert into the database.

We can fairly easily write a transformer function which takes the JSON terms and turns them into appropriate Mnesia records. Planets live in a fixture file planets.json, which we can read and transform. Some conversion is necessary on the way since the internal representation differs slightly from the representation in the fixture:

    json_to_planet(
      #{ <<"pk">> := ID,
         <<"fields">> := #{
             <<"edited">> := Edited,
             <<"climate">> := Climate,
             <<"surface_water">> := SWater,
             <<"name">> := Name,
             <<"diameter">> := Diameter,
             <<"rotation_period">> := RotationPeriod,
             <<"created">> := Created,
             <<"terrain">> := Terrains,
             <<"gravity">> := Gravity,
             <<"orbital_period">> := OrbPeriod,
             <<"population">> := Population
            }}) ->
        #planet {
           id = ID,
           edited = datetime(Edited),
           climate = Climate,
           surface_water = number_like(SWater),
           name = Name,
           diameter = number_like(Diameter),
           rotation_period = number_like(RotationPeriod),
           created = datetime(Created),
           terrains = commasplit(Terrains),
           gravity = Gravity,
           orbital_period = number_like(OrbPeriod),
           population = number_like(Population)
          }.

Once we have this function down, we can utilize it to get a list of Mnesia records, which we can then insert into the database through a transaction:

    populate_planets(Terms) ->
        Planets = [json_to_planet(P) || P <- Terms],
        Txn = fun() ->
                  [mnesia:write(P) || P <- Planets],
                  ok
              end,
        {atomic, ok} = mnesia:transaction(Txn),
        ok.

The code to read in and populate the database is fairly straightforward. It is the last piece of the puzzle to inject relevant data into the Mnesia database:

populate(File, Fun) ->
    {ok, Data} = file:read_file(File),
    Terms = jsx:decode(Data, [return_maps]),
    Fun(Terms).

populate_tables(BaseDir) ->
    populate(filename:join(BaseDir, "transport.json"),
             fun populate_transports/1),
    populate(filename:join(BaseDir, "starships.json"),
             fun populate_starships/1),
    populate(filename:join(BaseDir, "species.json"),
             fun populate_species/1),
    populate(filename:join(BaseDir, "films.json"),
             fun populate_films/1),
    populate(filename:join(BaseDir, "people.json"),
             fun populate_people/1),
    populate(filename:join(BaseDir, "planets.json"),
             fun populate_planets/1),
    populate(filename:join(BaseDir, "vehicles.json"),
             fun populate_vehicles/1),
    setup_sequences(),
    ok.

This creates a fixture in the database, so that the planets, transports, people, …, are present in the Mnesia database when we boot the system.
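The helpers number_like/1 and commasplit/1 called from json_to_planet are not shown in this section. As a minimal sketch, assuming the fixture delivers these fields as binaries (a numeric string, a non-numeric marker, or a comma-separated list), they might look as follows. The module name fixture_sketch, the choice of undefined for values that do not parse, and the splitting rule are assumptions made for illustration, not the tutorial's actual code:

```erlang
-module(fixture_sketch).
-export([number_like/1, commasplit/1]).

%% Hypothetical helper: turn a numeric binary into a number.
%% Values that do not parse as a number become 'undefined'.
number_like(N) when is_number(N) ->
    N;
number_like(Bin) when is_binary(Bin) ->
    try
        binary_to_integer(Bin)
    catch
        error:badarg ->
            try
                binary_to_float(Bin)
            catch
                error:badarg -> undefined
            end
    end.

%% Hypothetical helper: split a comma-separated binary into trimmed parts,
%% dropping empty parts.
commasplit(Bin) when is_binary(Bin) ->
    Parts = binary:split(Bin, <<",">>, [global]),
    [string:trim(P) || P <- Parts, P =/= <<>>].
```

Normalizing at load time means the rest of the system only ever sees numbers and lists of binaries, never the raw fixture strings.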

Creating a FALLBACK for the database

Once we have run the schema creation routine, a file called FALLBACK.BUP is created. We copy this to the db directory in the repository

$ cp FALLBACK.BUP db/FALLBACK.BUP

which makes the empty schema available for the release manager of the Erlang system. When we cook a release, we will make sure to copy this initial schema into the correct Mnesia directory of the release.

Because the file is named FALLBACK.BUP, it is a fallback backup file.

This will “unpack” itself to become a new empty database as if you

had rolled in a backup on the first boot of the system. Thus we avoid

our system having to deal with this problem at start up.

| A real system will override the location of the Mnesia dir parameter and define a separate directory from which the Mnesia database will run. Initially, the operator will place the FALLBACK.BUP file in this directory to get going, but once we are established, and people start adding in data, we can’t reset anything when deploying new versions. Hence the separate directory so we can upgrade the Erlang system without having to protect the database as much. |

We now have the ability to create new database tables easily and we have a Mnesia database for backing our data. This means we can start turning our attention to the GraphQL schema.

GraphQL Schema

With a Mnesia database at our disposal, we next create a GraphQL schema definition. This file describes the contract between the client system and the server system. It is used by the GraphQL system to know which queries are valid and which aren’t.

In accordance with OTP design principles, we place this schema inside

a project's priv directory. Our GraphQL system can then refer to the

private directory of the application in order to load this schema when

the system boots up.

Identity encoding

In GraphQL, you have a special type, ID, which is used to attach an identity to objects. It is typically used as a globally unique identifier for each object in the graph, even across different object types: no two objects share an ID, even if they are of different types.

The ID is always generated by the server. Hence, a client must treat an ID as an opaque string value and never parse it. It can only return the ID value back to the server later on.

To make this more obvious, GraphQL implementations usually base64 encode

their ID-values. In Mnesia, our row IDs will be integers, and they

will overlap between different types/tables in the database. Since IDs

should be globally unique, we use an encoding in GraphQL. The Starship

with id 3 will be encoded as base64("Starship:3"). And the planet

Tatooine taken from the System Tour is encoded as

base64("Planet:1"). This definition somewhat hides the

implementation and also allows the server backend to redefine IDs

later for objects. Another use of the encoding is that it can define

what data source a given object came from, so you can figure out where to find

that object. It is highly useful in migration scenarios.
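To make the two examples above concrete, here is a small sketch that computes the opaque strings using only the base64 module from the standard library. The module name id_example and the function opaque_id/2 are hypothetical and exist only for this illustration:

```erlang
-module(id_example).
-export([opaque_id/2]).

%% Build the opaque ID for a row.
%% Type is the GraphQL type name as a binary, RowId the integer row key.
opaque_id(Type, RowId) when is_binary(Type), is_integer(RowId) ->
    base64:encode(<<Type/binary, ":", (integer_to_binary(RowId))/binary>>).
```

With this sketch, id_example:opaque_id(<<"Starship">>, 3) evaluates to <<"U3RhcnNoaXA6Mw==">> and id_example:opaque_id(<<"Planet">>, 1) to <<"UGxhbmV0OjE=">>. Because the type name is part of the encoded value, a starship and a planet with the same integer key still get distinct IDs.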

The encoder is simple because we can assume the server provides valid values:

encode({Tag, ID}) ->
    BinTag = atom_to_binary(Tag, utf8),
    IDStr = integer_to_binary(ID),
    base64:encode(<<BinTag/binary, ":", IDStr/binary>>).

The decoder is a bit more involved. It requires you to fail on invalid inputs. We usually don’t need to know what was invalid. We can simply fail aggressively if things turn out bad. A debugging session will usually uncover the details anyway as we dig into a failure.

decode(Input) ->
    try
        Decoded = base64:decode(Input),
        case binary:split(Decoded, <<":">>) of
            [BinTag, IDStr] ->
                {ok, {binary_to_existing_atom(BinTag, utf8),
                      binary_to_integer(IDStr)}};
            _ ->
                exit(invalid)
        end
    catch
        _:_ ->
            {error, invalid_decode}
    end.

The Node Interface

The Relay Modern specification (see Relay Modern) contains a standard for an Object Identification interface. Our schema implements this standard in order to make integration simpler for clients supporting the standard. The interface, also called the Node interface because of its type, allows you to “start from” any node in the graph which has an id field. That is, every node with identity can be a starting point for a query.

The interface is most often used as a way to refresh objects in a client cache. If the client has a stale object in the cache, and the object has identity, then you can refresh a subset of the graph, setting out from that object.

The interface specification follows the standard closely:

+description(text: "Relay Modern Node Interface")
interface Node {
  +description(text: "Unique Identity of a Node")
  id : ID!
}

The Erlang version of GraphQL allows a certain extension by the Apollo Community @ Github, who are building tools for GraphQL in general. This extension allows you to use Annotations in GraphQL schemas to attach more information to particular objects of interest. We use this for documentation. You can annotate almost anything with +description(text: "documentation"), which in turn attaches that documentation to an entity in the Graph.

Multi-line comments are also possible by using a backtick (`) rather than a quote symbol ("). This allows larger Markdown entries to be placed in the documentation, which tends to be good for documentation of APIs.

| You can’t easily use a backtick (`) inside the multiline quotations. This means you can’t easily write pre-formatted code sections unless you use indentation in the Markdown format. The choice was somewhat deliberate in that there is a workaround currently, and it tends to flow really well when you enter documentation by means of the backtick character. A future version of the parser might redo this decision. |

Object types

We follow the specification for describing object types. Thus, if you

describe an object like a starship as type Starship { … } the

system knows how to parse and internalize such a description. In the

following, we don’t cover all of the schema, but focus on a single

type in order to describe what is going on.

Planets

Since we have planets in the Mnesia database from the previous section, we can define the GraphQL Schema for them as well. The definition is quite straightforward given the Star Wars API we are trying to mimic already contains all the important parts.

For brevity, we omit the documentation of each individual field for now. Though a more complete implementation would probably include documentation on each field to a fine detail.

type Planet implements Node {
  name : String
  diameter : Int
  rotationPeriod : Int
  orbitalPeriod : Int
  gravity : String
  population : Int
  climate : String
  terrains : [String]
  surfaceWater : Int
  filmConnection(after: String, first: Int,
                 before: String, last: Int)
    : PlanetFilmsConnection
  residentConnection(after: String, first: Int,
                     before: String, last: Int)
    : PlanetResidentsConnection
  created : DateTime
  edited : DateTime
  id : ID!
}

Queries & Mutations

Query Object

All GraphQL queries are either a query or a mutation.[1]

Correspondingly, the schema specification contains entries for two

(output) objects, which are commonly called Query and Mutation

respectively. For example, the query object looks like:

type Query {
  +description(text: "Relay Modern specification Node fetcher")
  node(id : ID!) : Node
  +description(text: "Fetch a starship with a given Id")
  starship(id : ID!) : Starship
  allStarships : [Starship]
  allPlanets : [Planet]
  allPeople : [Person]
  allVehicles : [Vehicle]
  allSpecies : [Species]
  allFilms : [Film]
  filmByEpisode(episode: Episode) : Film!
}

The Query object defines the (public) query/read API of your backend. All queries will start from here, and the specification defines what you can do with the given query.

| The introspection capabilities of GraphQL will have the astute reader recognize that this predefined rule of what you can do with a query is very close to automatic discovery of capabilities. In other words, you get close to the notion of Hypertext as the engine of application state, while not reaching it. |

The field node allows you to request any node in the Graph. Later,

we will see how to implement the backend functionality for this call.

In this example, we will request a Starship through the node

interface by first requesting anything implementing the Node

interface, and then use an inline-fragment in order to tell the system

which fields we want to grab inside the starship:

query StarShipQuery($id : ID!) {
  node(id: $id) {
    __typename
    id
    ... on Starship {
      model
      name
    }
  }
}

Fields such as allStarships are in the schema in order to make it

easier to peruse the API. In a real system, such a query would be

dangerous since it potentially allows you to request very large data

sets. In practice you would use pagination or use a search engine to

return such results. Specifically, you would never return all possible

values, but only a subset thereof.

Mutation Object

The Query object concerns itself with reading data. The Mutation object is for altering data. In a CQRS understanding, the Mutation object is the command.

GraphQL treats mutations the same way as queries except for one subtle detail: queries may be executed in parallel on the server side whereas a mutation may not. Otherwise, a mutation works exactly like a query.

Because queries and mutations are the same for the GraphQL engine, we need to build up conventions in the mutation path in order to make things work out. First, since mutations start off from the Mutation object, we can add fields to that object which aren’t present in the Graph otherwise. This allows us to execute transactions at the server side for changes.

Each transaction becomes a field on the Mutation object. And the

result type of the given field is the return value of the mutation.

This return type, often called the payload, contains other objects in

the graph. It allows a client to run a mutation on the server side and

then query on the data it just changed. This corresponds to the

situation in RESTful models where a POST provides a Location:

response header containing the URI of the newly created object. But as

we want to avoid a roundtrip, we “bake” the query into the mutation

in GraphQL.

type Mutation {
  introduceFaction(input: IntroduceFactionInput!)
    : IntroduceFactionPayload
  introduceStarship(input: IntroduceStarshipInput!)
    : IntroduceStarshipPayload
}

Our mutation object grants a client access to a number of commands it

can execute. First, it can create new factions by means of the

introduceFaction field on the object. It can also create a new

Starship through the introduceStarship field.

Both fields take a single parameter, input of a given type (which we

will explain later). It provides data relevant to the given mutation. The

return value is a regular output type in the graph, for instance

IntroduceStarshipPayload:

type IntroduceStarshipPayload {
  clientMutationId : String
  faction : Faction
  starship : Starship
}

It is common that a payload-style object returns every object which

was manipulated as part of the update. In this case, since starship

introduction creates a new starship and refers to a faction, we return

the fields starship and faction. It allows a client to execute

queries pertaining to the current situation. Suppose for example we

add a new starship to a faction. We’d like the starship count of that

faction. This is easy due to the payload object. Factions have a

StarshipConnection, so we can just utilize that to get the count:

mutation IF($input : IntroduceStarshipInput!) {
  introduceStarship(input: $input) {
    starship {
      id
      name
    }
    faction {
      id
      name
      ships {
        totalCount
      }
    }
  }
}

It is typical use to get “cross-talk” updates like this in GraphQL when you refresh objects in the cache, or when you change the data through a mutation.

The mutations given here follow the conventions Facebook has defined for Relay Modern and a further treatment is given in the section Inputs & Payloads.

Input objects

The type system of GraphQL is “moded” as in Prolog/Twelf/Mercury or is “polarized” in the sense of type systems and semantics. Some data has “positive” mode/polarity and flows from the client to the server. Some data has “negative” mode/polarity and flows from the server to the client. Finally, some data are unmoded and can flow in either direction.

GraphQL distinguishes between input and output types. An input is positive and an output is negative. While at first, this might seem like a redundant and bad idea, our experience is that it helps a lot once your system starts to grow.

In GraphQL, the way you should think about Mutations is that they are

“stored procedures” in the backend you can execute. You don’t in

general get access to a command which says “PUT this object back”.

Rather, you get access to a mutation which alters the object in some

predetermined way: favoriteStarship(starshipId: ID!) for instance.

Because of this, GraphQL is built to discriminate between what types of data the client can send and what types of data the server can respond with.

Input and output objects are subject to different rules. In

particular, there is no way to input a null value in an input. The

way a client signals the absence of a value is by omission of the input

parameter in question. This choice simplifies clients and servers.

There are times where the client wants to input a number of values at the same time. You could add more parameters to the field:

type Mutation {
  introduceStarship(name: String,
                    class: String,
                    manufacturers: [String], ....)
    : IntroduceStarshipPayload
}

but this often gets unwieldy in the longer run. Rather than this, you can also solve the problem by creating an input object which only works in the input direction:

input IntroduceStarshipInput {
  clientMutationId : String
  name : String
  model : String
  starshipClass : String!
  manufacturers : [String] = [] (1)
  costInCredits : Float!
  length : Float!
  crew : String!
  faction : ID!
}

| 1 | Default parameter value, see Schema default values. |

In turn, the client can now input all these values as one. Grouping of like parameters is quite common in GraphQL schemas. Note how the fields of an input object are often vastly different from those of the output object it corresponds to. This is the reason why having two worlds, input and output, is useful.

| The input object presented here doesn’t contain a full starship input. In a full solution, you would provide means to add in pilots, films, hyperdrive ratings and so on. |

It is possible to define default parameters in the schema as well, as seen in the above example. This is highly useful as a way to simplify the backend of the system. By providing sensible defaults, you can often avoid a specialized code path which accounts for an unknown value. It also simplifies the client as it doesn’t have to input all values unless it wants to make changes from the defaults. Finally it documents, to the client, what the defaults are.

clientMutationId

By now, you have seen that an input object has a clientMutationId

and the corresponding payload also has a clientMutationId. This is

part of Relay Modern conventions.

Every …Input object in a mutation contains a field clientMutationId

which is an optional string. The server reflects the contents of

clientMutationId back to the client in its …Payload response. It

is used by clients to determine what request a given response pertains

to. The client can generate a UUID say, and send it round-trip to the

server. It can then store a reference to the UUID internally. Once the

response arrives, it can use the clientMutationId as a correlation

system and link up which request generated said response.

The solution allows out-of-order processing of multiple mutations at the same time by the client. In an environment where you have no true parallelism and have to simulate concurrency through a continuation passing style, it tends to be useful.
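The correlation step on the client side can be sketched as a small bookkeeping module. This is only an illustration written in Erlang for consistency with the rest of the tutorial; the module name correlation_sketch, the function names, and the assumption that the payload arrives as a map with a binary clientMutationId key are all hypothetical:

```erlang
-module(correlation_sketch).
-export([track/3, resolve/2]).

%% Remember an in-flight mutation under its clientMutationId.
track(MutationId, Request, Pending)
  when is_binary(MutationId), is_map(Pending) ->
    Pending#{ MutationId => Request }.

%% Match a payload against the pending set by its reflected
%% clientMutationId. Returns the original request and the remaining set.
resolve(#{ <<"clientMutationId">> := MutationId }, Pending) ->
    case maps:take(MutationId, Pending) of
        {Request, Rest} -> {ok, Request, Rest};
        error -> {error, unknown_mutation_id}
    end.
```

Because lookup is by identifier rather than by arrival order, responses can be resolved in whatever order they come back.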

We will later on see how to implement a “middleware” which handles the mutation IDs globally in the system once and for all.

Interfaces & Unions

In GraphQL, an interface is a way to handle the heterogeneous nature of

modern data. Several objects may share the same fields with the same

types. In this case, we can provide an interface for those fields

they have in common.

Likewise, if we have two objects which can logically be the output of

a given field, we can use a union to signify that a set of disparate

objects can be returned.

In the case of an interface, the client is only allowed to access the fields in the interface, unless it fragment-expands in order to be more specific. For unions, the client must fragment expand to get the data.

The Star Wars Schema has an obvious example of an interface via the Node specification above, but there is another interface possible in the specification: both Starship and Vehicle share a large set of data in the concept of a Transport. We can arrange for this overlap by declaring an interface for transports:

interface Transport {
  id : ID!
  edited : DateTime
  consumables : String
  name : String
  created : DateTime
  cargoCapacity : Float
  passengers : String
  maxAtmospheringSpeed : Int
  crew : String
  length : Float
  model : String
  costInCredits : Float
  manufacturers : [String]
}

And then we include the interface when we declare either a starship or a vehicle. Here we use the Starship as an example:

+description(text: "Representation of Star Ships")
type Starship implements Node, Transport {
  id : ID!
  name : String
  model : String
  starshipClass : String
  manufacturers : [String]
  costInCredits : Float
  length : Float
  crew : String
  passengers : String
  maxAtmospheringSpeed : Int
  hyperdriveRating : Float
  MGLT : Int
  cargoCapacity : Float
  consumables : String
  created: DateTime
  edited: DateTime
  ...

Interfaces and Unions are so-called abstract types in the GraphQL specification. They never occur in concrete data, but are a type-level relationship only. Thus, when handling an interface or a union, you just return concrete objects. In the section Type Resolution we will see how the server will handle abstract types.

| If you have an object which naturally shares fields with other objects, consider creating an interface—even in the case where you have no use for the interface. Erlang GraphQL contains a schema validator which will validate your fields to be in order. It is fairly easy to mess up schema types in subtle ways, but if you write them down, the system can figure out when you make this error. |

Schema default values

In the schema, it is possible to enter default values: field : Type =

Default. We already saw an example of this in the section on inputs

for introducing starships.

Defaults can be supplied on input types in general. So input objects and field arguments can take default values. When a client omits a field or a parameter, the default from the schema is substituted according to the GraphQL rules.

This is highly useful because you can often avoid code paths which

fork in your code. Omission of an array of strings, say, can be

substituted with the default [] which is better than null in

Erlang code in many cases. Default counters can be initialized to some

zero-value and so on. Furthermore, in the schema a default will be

documented explicitly. So a client knows what the default value is and

what is expected. We advise programmers to utilize schema defaults

whenever possible to make the system easier to work with on the client

and server side.
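As a small illustration of how a default removes a fork in the code, consider a function that counts manufacturers. The sketch assumes the input object reaches the resolver as a map with binary keys; that shape, the module name defaults_sketch, and the function name are assumptions made for this example:

```erlang
-module(defaults_sketch).
-export([manufacturer_count/1]).

%% With a schema default of [], the field is always present and always
%% a list, so no separate clause for a missing value is needed.
manufacturer_count(#{ <<"manufacturers">> := Ms }) when is_list(Ms) ->
    length(Ms).
```

Without the default, the function would need an extra clause to handle the case where the client left the field out.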

Schema defaults must be type-valid for the type they have. You can’t supply a string value as the default for an integer type, for instance. Also, the rule is that non-null yields to default values: if a default value is given, it is as if that variable is always given (see the GraphQL specification on coercion of variable values).

Loading the Schema

In order to work with a schema, it must be loaded. We can load it as

part of booting the sw_core application in the system. After having

loaded the supervisor tree of the application, we can call out and

load the star wars schema into the system. The main schema loader is

defined in the following way:

load_schema() ->
    {ok, SchemaFile} = application:get_env(sw_core, schema_file),
    PrivDir = code:priv_dir(sw_core),
    {ok, SchemaData} = file:read_file(
                         filename:join(PrivDir, SchemaFile)),
    Mapping = mapping_rules(),
    ok = graphql:load_schema(Mapping, SchemaData),
    ok = setup_root(),
    ok = graphql:validate_schema(),
    ok.

To load the schema, we figure out where it is in the file system. The name of the schema file is stored in an environment variable inside sw_core.app, and we let OTP figure out where the application's private directory is. Then the schema is loaded according to the mapping rules of the schema.
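For orientation, the environment entry read by application:get_env(sw_core, schema_file) could be declared along these lines. This is a hedged sketch of an application resource file: the description, version, and application list are placeholders, and the file name "sw.schema" is an assumption based on the schema file name used elsewhere in this tutorial:

```erlang
%% Fragment of a hypothetical sw_core.app.src; only the env entry matters.
{application, sw_core,
 [{description, "Star Wars core application (sketch)"},
  {vsn, "0.1.0"},
  {applications, [kernel, stdlib]},
  {env, [
      %% Resolved relative to the application's priv directory at boot.
      {schema_file, "sw.schema"}
  ]}
 ]}.
```

Keeping the file name in the application environment means it can be overridden per deployment without touching the loader code.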

After the schema loads, we set up a schema root which is how to start out a query or a mutation. Finally, we validate the schema. This runs some correctness checks on the schema and fails if the sanity checks don’t pass. It forces you to define everything you use, and it also verifies that interfaces are correctly implemented.

| Currently, the schema root is set up “manually” outside the schema definition. It is likely that a later version of the implementation will be able to do this without manually injecting the root, but by having the root being part of the schema definition. |

| Always run the schema validator once you’ve finished assembling your schema. Many errors are caught automatically by the validator, and it removes the hassle of debugging later. Also, it runs fairly quickly, so run it as part of your system’s boot phase. This ensures your system won’t boot if there is some kind of problem with your schema definition. If you have a boot-test as part of your testing framework or CI system, you should be able to use this as a “schema type checker” and weed out some obvious definitional bugs. |

Root setup

The root setup defines how a query begins by defining what type in the

schema specification is the root for queries and mutations

respectively. By convention, these types are always called Query and

Mutation so it is easy to find the Root’s entry points in the

Graph.

The query root must be injected into the schema so the GraphQL system

knows where to start. This is done in the file sw_core_app in the

function setup_root:

setup_root() ->
    Root = {root,
            #{ query => 'Query',
               mutation => 'Mutation',
               interfaces => ['Node']
             }},
    ok = graphql:insert_schema_definition(Root),
    ok.

Mapping rules

The mapping rules of the GraphQL system define how the types in the schema map onto Erlang modules. Since many mappings can be coalesced into one, there is the possibility of defining a default mapping which just maps every unmapped object to the default.

All of the mappings go from an atom, which is the type in the schema you want to map, to an atom, which is the Erlang module handling that particular schema type.

mapping_rules() ->
    #{
       scalars => #{ default => sw_core_scalar },
       interfaces => #{ default => sw_core_type },
       unions => #{ default => sw_core_type },
       enums => #{ 'Episode' => sw_core_enum,
                   default => sw_core_enum },
       objects => #{
         'Planet' => sw_core_planet,
         'Film' => sw_core_film,
         'Species' => sw_core_species,
         'Vehicle' => sw_core_vehicle,
         'Starship' => sw_core_starship,
         'Person' => sw_core_person,
         'Faction' => sw_core_faction,
         'Query' => sw_core_query,
         'Mutation' => sw_core_mutation,
         default => sw_core_object }
     }.

Scalars

Every scalar type in the schema is mapped through the scalar mapping

part. It is quite common a system only has a single place in which all

scalars are defined. But it is also possible to split up the scalar

mapping over multiple modules. This can be useful if you have a piece

of code where some scalars naturally live in a sub-application of some

kind.

Interfaces & Unions

In GraphQL, two kinds of abstract types are defined: interfaces and unions. Interfaces abstract over concrete types which have some fields in common (and thus the fields must also agree on types). Unions abstract over concrete types that have nothing in common and thus simply define a heterogeneous collection of types.

For the GraphQL system to operate correctly, execution must have a way to take an abstract type and make it concrete. Say, for instance, you have just loaded an object of type Node. We don’t yet know that it is a starship, but if the programmer fragment-expands on the Starship type

query Q($nid : ID!) {
  node(id: $nid) {
    ... on Starship {
      model
    }
  }
}

we need to know if the concrete node loaded indeed was a starship. The type resolver is responsible for figuring out what concrete type we have. Commonly, we map both the interface type and the union type to the same resolver.

The reason this needs to be handled by the programmer is because the GraphQL system doesn’t know about your representation. In turn, it will call back into your code in order to learn which type your representation has.
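A resolver of this kind can be as small as a pattern match on the internal representation. The following is a sketch only: the callback name execute/1 and its return shape are assumptions here (the section Type Resolution describes the actual contract), and the two records are stand-ins for the real database records:

```erlang
-module(type_sketch).
-export([execute/1]).

%% Stand-in records; the real ones would come from the database header.
-record(starship, {id, name}).
-record(planet, {id, name}).

%% Map an internal representation to the name of its concrete type.
execute(#starship{}) -> {ok, 'Starship'};
execute(#planet{}) -> {ok, 'Planet'};
execute(_Other) -> {error, unknown_type}.
```

Since records are tagged tuples, the match is cheap, and one module can serve every interface and union in the schema.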

Objects

The mapping of objects is likely to have a special mapping for each object type you have defined. This is because each kind of (output) object tends to be different and requires its own handler.

Note that it is possible to define the type of the object as an

atom() here. This is common in GraphQL for Erlang. You can write

definitions as either atoms or binaries. They are most often returned

as binaries at the moment, however.

| The choice of using binaries may turn out to be wrong. We’ve toyed with different representations of this, and none of them fell out like we wanted. However, because the nature of GraphQL makes sure that an enemy cannot generate arbitrary atoms in the system, we could use atoms in a later version of GraphQL. For now, however, most parts of the system accept atoms or binaries, and convert data to binaries internally. |

Scalar Resolution

In a GraphQL specification, the structure of queries is defined by objects, interfaces and unions. But the “ground” types initially consist of a small set of standard types:

- Int: Integer values
- Float: Floating point values
- String: Textual strings
- Boolean: Boolean values
- ID: Identifiers, values which are opaque to the client

These ground types are called Scalars. The set of scalars is extensible with your own types. Some examples of typical scalars to extend a Schema by:

- DateTime objects, with or without time zone information
- Email addresses
- URIs
- Colors
- Refined types, Floats in the range 0.0-1.0 for instance

Clients input scalar values as strings. Thus, the input string has to be coerced by the GraphQL system; this is called input coercion. Vice versa, when a value is returned from the GraphQL backend, we must coerce it so the client can handle it. This is called output coercion.

The advantage of coercing inputs from the client is that we can not only validate that the client sent something correct, but also coerce different representations at the client side into a canonical one on the server side. This greatly simplifies the internals, as we can pick a different internal representation than the one the client operates with.

In particular, we can choose an internal representation which is unrepresentable on the client side. That is, the client could be Java or JavaScript and neither of those languages has a construct for tuples which is nice to work with. At least not when we consider JSON as a transport for those languages. Yet, due to canonicalization, we may still use tuples and atoms internally in our Erlang code, as long as we make sure to output-coerce values such that they are representable by the transport and by the client.

In the Star Wars schema, we have defined the scalar DateTime, which we use to coerce datetimes. If a client supplies a datetime, we will run that through the iso8601 parsing library and obtain a calendar:datetime() tuple in Erlang. On output coercion, we will convert it back into ISO8601/RFC3339 representation. This demonstrates the common phenomenon in which an internal representation (tuples) is not realizable in the external representation, yet we can work around representation problems through coercion:

sw.schema:

scalar DateTime

We have arranged that data loaded into Mnesia undergoes iso8601 conversion by default such that the internal data are calendar:datetime() objects in Mnesia. When output-coercing these objects, the GraphQL system realizes they are of type DateTime. This calls into the scalar conversion code we have mapped into the Star Wars schema:

sw_core_scalar.erl:

-module(sw_core_scalar).

-export([input/2, output/2]).

input(<<"DateTime">>, Input) ->
    try iso8601:parse(Input) of
        DateTime -> {ok, DateTime}
    catch
        error:badarg ->
            {error, bad_date}
    end;
input(_Type, Val) ->
    {ok, Val}.

output(<<"DateTime">>, DateTime) ->
    {ok, iso8601:format(DateTime)};
output(_Type, Val) ->
    {ok, Val}.

A scalar coercion is a pair of two functions:

- input/2: Called whenever a scalar value needs to be coerced from client to server. The valid responses are {ok, Val} | {error, Reason}. The converted response is substituted into the query so the rest of the code can work with converted values only. If {error, Reason} is returned, the query is failed. This can be used to white-list certain inputs only and serves as a correctness/security feature.
- output/2: Called whenever a scalar value needs to be coerced from server to client. The valid responses are {ok, Val} | {error, Reason}. Conversion makes sure that a client only sees coerced values. If an error is returned, the field is regarded as an error. It will be replaced by a null and Null Propagation will occur.

In our scalar conversion pair, we handle DateTime by using the

iso8601 module to convert to/from ISO8601 representation. We also

handle other manually defined scalar values by simply passing them

through.
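The same input/2 and output/2 shape also covers refined types such as a float restricted to the range 0.0 to 1.0. The sketch below uses a hypothetical scalar named Fraction, which is not part of the Star Wars schema, and assumes the value reaches input/2 already parsed as a number; the module name and error atom are likewise made up for the example:

```erlang
-module(fraction_scalar_sketch).
-export([input/2, output/2]).

%% Accept only numbers in the closed range 0.0..1.0 for the hypothetical
%% scalar "Fraction" and store them as floats internally.
input(<<"Fraction">>, Val) when is_number(Val), Val >= 0, Val =< 1 ->
    {ok, float(Val)};
input(<<"Fraction">>, _Val) ->
    {error, not_a_fraction};
input(_Type, Val) ->
    {ok, Val}.

%% Only floats leave the server for this scalar.
output(<<"Fraction">>, Val) when is_float(Val) ->
    {ok, Val};
output(<<"Fraction">>, _Val) ->
    {error, not_a_fraction};
output(_Type, Val) ->
    {ok, Val}.
```

Rejecting out-of-range values at the boundary means the rest of the code can rely on the invariant without re-checking it.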

| Built-in scalars such as Int, Float, String and Boolean are handled by the system internally and do not currently undergo Scalar conversion. A special case exists for Int and Float. These are coerced between automatically if it is safe to do so.[2] |

Example

Consider the following GraphQL query:

query SpeciesQ {
  node(id: "U3BlY2llczoxNQ==") {
    id
    ... on Species {
      name
      created
    }
  }
}

which returns the following response:

{
  "data": {
    "node": {
      "created": "2014-12-20T09:48:02Z",
      "id": "U3BlY2llczoxNQ==",
      "name": "Twi'lek"
    }
  }
}

The id given here can be decoded to "Species:15". We can use the Erlang shell to read in that species:

(sw@127.0.0.1)1> rr(sw_core_db). % (1)
[film,person,planet,sequences,species,starship,transport,
 vehicle]
(sw@127.0.0.1)2> mnesia:dirty_read(species, 15).
[#species{id = 15,
          edited = {{2014,12,20},{21,36,42}},
          created = {{2014,12,20},{9,48,2}}, (2)
          classification = <<"mammals">>,name = <<"Twi'lek">>,
          designation = undefined,
          eye_colors = [<<"blue">>,<<"brown">>,<<"orange">>,
                        <<"pink">>],
          ...}]

| 1 | Tell EShell where the records live so we can get better printing in the shell. |

| 2 | Note the representation in the backend. |

When the field created is requested, the system will return it as {{2014,12,20},{9,48,2}} and because it has type DateTime it will undergo output coercion to the ISO8601 representation.

How the field is requested and fetched out of the Mnesia database is described in the section Object Resolution.
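To make the DateTime round trip concrete, here is a minimal sketch of a scalar conversion pair. It is an illustration under assumptions, not the tutorial's actual module: the module name datetime_sketch and the helper valid_time/1 are hypothetical, the argument shapes are borrowed from the enum coercer shown later in this document, and the conversion is hand-rolled with the standard library rather than delegated to the iso8601 module. It only accepts the exact "YYYY-MM-DDTHH:MM:SSZ" form.

```erlang
-module(datetime_sketch).
-export([input/2, output/2]).

%% External -> internal: parse "YYYY-MM-DDTHH:MM:SSZ" into a
%% calendar:datetime() tuple. Anything else is rejected.
input(<<"DateTime">>, <<Y:4/binary, $-, Mo:2/binary, $-, D:2/binary, $T,
                        H:2/binary, $:, Mi:2/binary, $:, S:2/binary, $Z>>) ->
    try
        Date = {binary_to_integer(Y), binary_to_integer(Mo), binary_to_integer(D)},
        Time = {binary_to_integer(H), binary_to_integer(Mi), binary_to_integer(S)},
        case calendar:valid_date(Date) andalso valid_time(Time) of
            true  -> {ok, {Date, Time}};
            false -> {error, bad_date}
        end
    catch
        %% binary_to_integer/1 raises badarg on non-numeric input
        error:badarg -> {error, bad_date}
    end;
input(<<"DateTime">>, _Other) ->
    {error, bad_date};
%% Other manually defined scalars are passed through unchanged.
input(_Type, Val) ->
    {ok, Val}.

%% Internal -> external: render a calendar:datetime() as an ISO8601 binary.
output(<<"DateTime">>, {{Y, Mo, D}, {H, Mi, S}}) ->
    Str = io_lib:format("~4..0B-~2..0B-~2..0BT~2..0B:~2..0B:~2..0BZ",
                        [Y, Mo, D, H, Mi, S]),
    {ok, iolist_to_binary(Str)};
output(<<"DateTime">>, _Other) ->
    {error, bad_date};
output(_Type, Val) ->
    {ok, Val}.

%% Hypothetical helper: range check for the time-of-day part.
valid_time({H, Mi, S}) ->
    H >= 0 andalso H < 24 andalso
    Mi >= 0 andalso Mi < 60 andalso
    S >= 0 andalso S < 60.
```

With this sketch, the backend value {{2014,12,20},{9,48,2}} from the shell session above is rendered as the binary <<"2014-12-20T09:48:02Z">>, which matches the created field in the response.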

Enum Resolution

GraphQL defines a special kind of scalar type, namely the enum type. An enumerated type is one which can take only a closed set of values.

By convention, GraphQL systems tend to define these as all upper-case letters, but that is merely a convention to make them easy to distinguish from other things in a GraphQL query document.

Erlang requires some thought about these. On one hand, we have an obvious representation internally in Erlang by using an atom() type, but these are not without their drawbacks:

-

The table of atoms is limited in Erlang. So if you can create them freely, you end up exhausting the atom table eventually. Thus, you cannot have an “enemy” create them.

-

In Erlang, atoms which begin with an upper-case letter have to be quoted. This is not always desirable.

-

Many transport formats, database backends and so on do not support atom types well. They don’t have a representation of what Scheme calls a "symbol". So in that case they need handling.

Because of this, Erlang GraphQL defines an enum mapping construction exactly like the one we have for Scalar Resolution. This allows the programmer to translate enums as they enter or leave the system, so the data format can be changed to something that fits the rest of the system. In short, enums undergo coercion just like any other value.

In GraphQL, there are two paths for inputting an enum value: query document and query parameters. In the query document an enum is given as an unquoted value. It is not legal to input an enum as a string in the query document (presumably to eliminate some errors up front). In contrast, in the parameter values, we are at the whim of its encoding. JSON is prevalent here and it doesn’t have any encoding of enums. Hence, they are passed as strings here.

In order to simplify the input coercion code for these, we always pass them to coercers as binary data, regardless of which path they arrived by. Developers then only have to cater for one case.

Defining enums

You define enum values in the schema as mandated by the GraphQL specification. In the Star Wars schema, we define the different film episodes like so

enum Episode {
  PHANTOM
  CLONES
  SITH
  NEWHOPE
  EMPIRE
  JEDI
}

which defines a new enum type Episode with the possible values PHANTOM, CLONES, ….

Coercion

In order to handle these enum values internally inside a server, we need a way to translate them. This is done by a coercer module, just like for scalars. First, we introduce a mapping rule

#{ ...
   enums => #{ 'Episode' => sw_core_enum },
   ... }

in the schema mapping (see Mapping rules for the full explanation). This means that the type Episode is handled by the coercer module sw_core_enum.

The module follows the same structure as in Scalar Resolution. You define two functions, input/2 and output/2 which handle the translation from external to internal representation and vice versa.

-module(sw_core_enum).

-export([input/2, output/2]).

%% Input mapping (1)
input(<<"Episode">>, <<"PHANTOM">>) -> {ok, 'PHANTOM'};
input(<<"Episode">>, <<"CLONES">>)  -> {ok, 'CLONES'};
input(<<"Episode">>, <<"SITH">>)    -> {ok, 'SITH'};
input(<<"Episode">>, <<"NEWHOPE">>) -> {ok, 'NEWHOPE'};
input(<<"Episode">>, <<"EMPIRE">>)  -> {ok, 'EMPIRE'};
input(<<"Episode">>, <<"JEDI">>)    -> {ok, 'JEDI'}.

%% Output mapping (2)
output(<<"Episode">>, Episode) ->
    {ok, atom_to_binary(Episode, utf8)}.

| 1 | Conversion in the External → Internal direction |

| 2 | Conversion in the Internal → External direction |

In the example we turn binary data from the outside into appropriate atoms on the inside. This is useful in the case of our Star Wars system because the Mnesia database is able to handle atoms directly. This code also protects our system against creating illegal atoms: partially because the coercer module cannot generate them, but also because the GraphQL type checker rejects values which are not valid enums in the schema.

In the output direction, our values are already the right ones, so we can just turn them into binaries.
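As a variation on the module above, the following sketch keeps the allowed values in a single lookup table and adds explicit error clauses, so unexpected input yields {error, Reason} instead of a function_clause crash. The module name enum_sketch, the helper episodes/0, and the error terms are hypothetical choices for illustration; only the input/2 and output/2 shapes are taken from the text.

```erlang
-module(enum_sketch).
-export([input/2, output/2]).

%% Closed table of legal values. The atoms are literals in the source,
%% so no atom is ever created from client-supplied data.
episodes() ->
    #{ <<"PHANTOM">> => 'PHANTOM',
       <<"CLONES">>  => 'CLONES',
       <<"SITH">>    => 'SITH',
       <<"NEWHOPE">> => 'NEWHOPE',
       <<"EMPIRE">>  => 'EMPIRE',
       <<"JEDI">>    => 'JEDI' }.

%% External -> internal: enum values always arrive as binaries.
input(<<"Episode">>, Bin) when is_binary(Bin) ->
    case maps:find(Bin, episodes()) of
        {ok, Atom} -> {ok, Atom};
        error      -> {error, {unknown_enum_value, Bin}}
    end;
input(_Type, _Val) ->
    {error, unknown_enum_type}.

%% Internal -> external: only atoms are accepted.
output(<<"Episode">>, Atom) when is_atom(Atom) ->
    {ok, atom_to_binary(Atom, utf8)};
output(_Type, _Val) ->
    {error, not_an_enum_value}.
```

Because enum values always reach the coercer as binaries, the same input/2 clause serves both the query document path and the query parameter path.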

| The GraphQL system doesn’t trust an output coercion function. It will check that the result indeed matches a valid enum value. If it doesn’t, the system will null the value and produce an error with an appropriately set path component. |

Usage Example

In GraphQL, we can run a query which asks for a film by its episode enum and then obtain some information on the film in question:

query FilmQuery {
  filmByEpisode(episode: JEDI) {
    id
    title
    episodeID
    episode
  }
}

Note how we use the value JEDI as an enum value for the episode in question. The GraphQL type checker, or your GraphiQL system will report errors if you misuse the enum value in this case.

The output is as one expects from GraphQL:

{ "data" :
  { "filmByEpisode" :
    { "episode" : "JEDI",
      "episodeID" : 6,
      "id" : "RmlsbToz",
      "title" : "Return of the Jedi"
    }
  }
}

Here, the field episode returns the string "JEDI" because the JSON output has no way of representing an enum value. This is the GraphQL default convention in this case. Likewise, enum input as a query parameter, e.g. as part of query Q($episode : Episode) { … }, should set the $episode value to be a string:

{ "episode" : "EMPIRE",
  ...
}

which will be interpreted by Erlang GraphQL as an enumerated value.

Type Resolution

In GraphQL, certain types are abstract. These are interfaces and unions. When the GraphQL system encounters an abstract type, it must have a way to resolve those abstract types into concrete (output) types. This is handled by the type resolution mapping.

The executor of GraphQL queries uses the type resolver when it wants to make an abstract object concrete. The executor can then continue working with the concretized object and thus determine if fragments should expand and so on.

A type resolver takes an Erlang term as input and provides a resolved type as output:

-spec execute(Term) -> {ok, Type} | {error, Reason}
  when
    Term :: term(),
    Type :: atom(),
    Reason :: term().

The Term is often some piece of data loaded from a database, but it can be any representation of data in the Erlang system. The purpose of the execute/1 function is to analyze that data and return what type it belongs to (as an atom). In our case, we can assume resolution works on Mnesia objects. Hence, by matching on Mnesia objects, we can resolve the type in the Graph of those objects (file: sw_core_type.erl):

execute(#film{}) -> {ok, 'Film'};
execute(#person{}) -> {ok, 'Person'};
execute(#planet{}) -> {ok, 'Planet'};
execute(#species{}) -> {ok, 'Species'};
execute(#starship{}) -> {ok, 'Starship'};
execute(#transport{}) -> {ok, 'Transport'};
execute(#vehicle{}) -> {ok, 'Vehicle'};
execute(#faction{}) -> {ok, 'Faction'};
execute(#{ starship := _, transport := _ }) -> {ok, 'Starship'};
execute(#{ vehicle := _, transport := _ }) -> {ok, 'Vehicle'};
execute(_Otherwise) -> {error, unknown_type}.

| In larger implementations, you often use multiple type resolvers and use the mapping rules to handle different abstract types via different resolvers. Also, type resolution is likely to forward decisions to other modules and merely act as a dispatch layer for the real code. The current implementation allows for a great deal of flexibility for this reason. |

Use pattern matching in the execute/1 function to vary what kinds of data you can process. Do not be afraid to wrap your objects into other objects if that makes it easier to process. Since you can handle any Erlang term, you can often wrap your objects in a map of metadata and use the metadata for figuring out the type of the object. See Object Representation for a discussion of commonly used variants.
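The metadata-wrapping idea can be sketched as follows. This is only an illustration: the module name type_sketch, the map keys type and object, and the two trimmed-down records are assumptions made for the example and are not part of the Star Wars code base.

```erlang
-module(type_sketch).
-export([execute/1]).

%% Trimmed-down stand-ins for the real Mnesia records (assumption).
-record(starship, {id, name}).
-record(planet, {id, name}).

%% Wrapped objects: a map of metadata carries an explicit type tag,
%% so the resolver does not need to inspect the payload at all.
execute(#{ type := Type, object := _Payload }) when is_atom(Type) ->
    {ok, Type};
%% Bare records: resolve by matching on the record name.
execute(#starship{}) -> {ok, 'Starship'};
execute(#planet{})   -> {ok, 'Planet'};
%% Anything else cannot be resolved.
execute(_Otherwise)  -> {error, unknown_type}.
```

A wrapper such as #{ type => 'Planet', object => Row } is handy when the payload itself, for example a plain row from an external database, carries no hint about which GraphQL type it represents.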

Object Resolution

The meat of a GraphQL system is the resolver for objects. You have a concrete object on which you are resolving fields. As we shall see, this is used for three kinds of things a client wants to do with the graph.

First, field resolution on objects is used because you have a loaded object and the client has requested some specific fields on that object. For example, you may have loaded a Starship and you might be requesting the name of the Starship.

Second, field resolution on objects is used to derive values from other values. Suppose a Starship had two fields internally cargoCapacity and cargoLoad. We might want to compute the load factor of the Starship as a value between 0.0 and 1.0. This amounts to running the computation CargoLoad / CargoCapacity. Rather than storing this value in the data, we can just compute it by derivation if the client happens to request the field. Otherwise, we abstain from computing it.

An advantage of derivation is that you can handle things lazily. Once the client wants a field, you start doing the work for computing and returning that field. Also, derivation improves data normalization in many cases. Modern computers are fast and data fetches tend to be the major part of a client request. A bit of computation before returning data is rarely going to be dominant in the grand scheme of things.
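The load factor derivation can be sketched as a small helper which a field resolver might call when the client requests the derived field. Everything named here is hypothetical: the module derive_sketch, the function load_factor/1, and the map keys cargo_load and cargo_capacity stand in for however the Starship is actually represented. Returning null when no meaningful capacity exists is a design choice of this sketch, not something the tutorial prescribes.

```erlang
-module(derive_sketch).
-export([load_factor/1]).

%% Derive the load factor on demand instead of storing it.
load_factor(#{ cargo_load := Load, cargo_capacity := Capacity })
  when is_number(Load), is_number(Capacity), Capacity > 0 ->
    %% The result lies between 0.0 and 1.0 as long as Load =< Capacity.
    {ok, Load / Capacity};
%% A ship with a zero or unknown capacity has no meaningful load factor.
load_factor(#{ cargo_capacity := _ }) ->
    {ok, null};
load_factor(_Otherwise) ->
    {error, not_a_starship}.
```

The division only happens when the function is called, which mirrors the lazy behaviour described above: if the client never asks for the field, no work is done.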

Finally, field resolution on objects is used to fetch objects from a backend data store. Consider the field node(id: ID!) on the Query object in the schema:

type Query {
  +description(text: "Relay Modern specification Node fetcher")
  node(id : ID!) : Node
  +description(text: "Fetch a starship with a given Id")
  starship(id : ID!) : Starship
  allStarships : [Starship]
  allPlanets : [Planet]
  allPeople : [Person]
  allVehicles : [Vehicle]
  allSpecies : [Species]
  allFilms : [Film]
  filmByEpisode(episode: Episode) : Film!
}

When a query starts executing, an initial_term is injected by

the developer. By convention this object is often either null or